
A Scary AI Story (and How to Avoid AI Nightmares)

Earlier this week, one of our technical gurus reported a positively spine-tingling revelation.

He was using AI to dig into the details of a particularly complex project and came across a response that stopped him dead…

In short, ‘Mr Chat’ had responded to a query with information that revealed ‘insider knowledge’ – right down to code level – of a partner’s software platform.

Now, this is software we’ve worked with day in, day out, for over a decade… but scarily, ChatGPT knew more than we did. In fact, ‘it’ knew things that no one – other than the authors of the software – should ever be privy to.

So how did ‘Mr Chat’ get access to that information?

The only explanation we could think of was that a developer at our partner’s company might have used ChatGPT either to generate or to troubleshoot code.

This revelation totally spooked us: If AI platforms can surface details like this, what else might they be revealing to the world at large?

ChatGPT (and other AI assistants) have quickly become the ‘go to’ aid for writing, analysing, and problem-solving – not just for developers, but for sales teams, marketers, analysts, project managers, and IT teams.

Yet using AI without care could be exposing far more than you realise: from proprietary processes and configuration details to customer data and perhaps even trade secrets.

Could using AI within your company turn into a horror story? Here are 5 things you can do to avoid such nightmares.

1: Have an AI Policy

High on your list of priorities should be to have an acceptable use policy. In other words, make it clear to your workforce where and how AI should and shouldn’t be used.

For example, did you see in the news the other day that Deloitte agreed to refund part of its fee for a paid-for report that contained AI-generated errors?

Situations like this highlight the need for clear, ‘set in stone’ rules. In Deloitte’s case, a suitable rule might be: ‘No AI-generated content should be released externally without human review and authorisation.’

You would almost certainly want to emphasise the fact that AI can be prone to ‘hallucinations’ with a warning that says:

‘Don’t take whatever AI tells you as ‘gospel’: check, and double-check!’

Publish your set of acceptable use policies and consider going one step further by making sure that all concerned have ‘read and understood’ them – perhaps with a quiz that must be passed and a box that needs ticking. By doing this you can create accountability and reinforce the policies’ importance. You can also prove due diligence should something ‘go awry’.
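As a rough illustration of the ‘read and understood’ check, here is a minimal Python sketch of how you might list who still has to complete it. The names (`PolicyRecord`, `PASS_MARK`, `outstanding`) and the 80% pass mark are our own assumptions for illustration, not taken from any particular product.

```python
from dataclasses import dataclass


@dataclass
class PolicyRecord:
    """One person's result for the AI policy sign-off (hypothetical structure)."""
    employee: str
    quiz_score: int      # percentage, 0-100
    acknowledged: bool   # the 'read and understood' tick box


PASS_MARK = 80  # assumed pass mark; set whatever suits your organisation


def outstanding(records, staff):
    """Return, in alphabetical order, staff who have not both passed and ticked the box."""
    done = {
        r.employee
        for r in records
        if r.acknowledged and r.quiz_score >= PASS_MARK
    }
    return sorted(set(staff) - done)
```

Anyone who failed the quiz, skipped the tick box, or never started shows up in the list, which gives you a simple chase-up report as well as an audit trail.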

We’ll be providing an example of an AI Policy in a future article, so make sure you subscribe to our newsletter using the link at the top of this page.

2: Get Your Workforce Some AI Training

Older folk like me need lots of hand-holding on new stuff, whereas Millennials and Gen Z are more likely to jump in and instinctively get to grips with what AI can do for them – without fear or hesitation.

Regardless of the demographics in your workforce, training is good and here are some things you might want to include in your mandatory AI training:

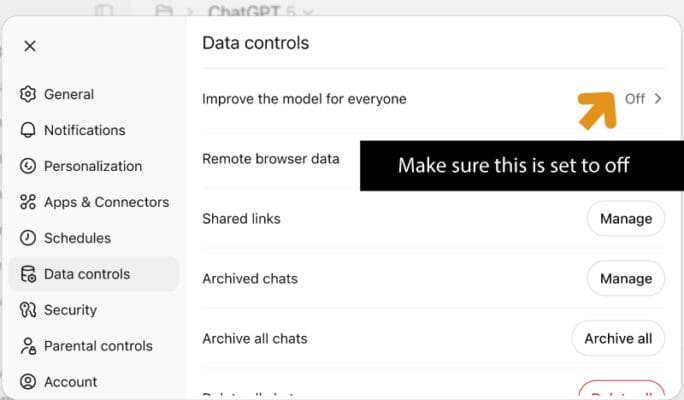

Privacy settings – Make sure everyone in your company knows how to check that the relevant data protection settings are in place. I must admit – I use a ‘paid-for’ version of ChatGPT for my own personal use and assumed (wrongly) that my data would be mine!

It turns out that even with a paid-for version of ChatGPT – you need to proactively throw a switch to stop it from using the information you supply for ‘training purposes’ on a worldwide basis! See point 3 below about licensing an enterprise AI platform.

AI prompt building – I find ChatGPT still manages to come up with the goods due to its conversational style (making up for any dodgy prompts on my part). By comparison, Copilot needs more precision and careful prompt creation. Making sure your workforce knows how to get the best out of your ‘official enterprise AI platform’ with the correct prompts is essential. It will also help stop people straying to the dark side and using unauthorised AI platforms because they don’t know how to use Copilot correctly and get frustrated.

AI transparency – Sometimes it’s obvious when AI has been used by a co-worker: the repetition, the bullets, the em dashes (these are a sure-fire giveaway).

Either way, you should make it clear to your workforce that they ‘should not pass off AI-generated content as entirely their own work or use AI tools in ways that could mislead colleagues, management, or customers about authorship or originality’.*

*Full disclosure: I used AI to generate the bit in quotes!!

Understanding the risks – Outline where you see the risks to your business to be and give specific use cases.

This may even be a straightforward risk to your reputation and customer and partner relationships.

As I said earlier, most of us can detect when someone’s sending us information that has been AI-generated.

Don’t you feel a bit ‘short changed’ (like I do) when someone sends you an obviously AI-generated email or document??

Essential prides itself on the fact that we have a very human face and want to maintain that personal touch for all our customers – I’m sure your organisation feels similarly.

When you’re rolling out AI training, we suggest you take a role-specific approach:

- Does the accounts team need as much AI training as the sales and marketing team?

- What AI tools are built into the role-specific tools your various teams are using? For example, our HubSpot sales platform has its own AI capability. Giving these teams specific goals around learning these tools will ensure you get the most out of your investment.

3: Don’t be Afraid of AI

Rather than trying to keep AI locked in the closet – get it out into the open!

Identify useful AI tools – Instead of shutting down every new tool and risking pushing its use into dark corners – start by understanding what’s already in use and where it can genuinely boost productivity.

For example, the engineering company my nephew works for holds a monthly ‘show and tell’ session that looks at the new AI tools folk have found useful. From there they assess their potential, and decide where they can safely add value.

This kind of structured, open approach not only encourages innovation but also brings AI usage into the light, making it easier to govern responsibly.

Invest in enterprise-grade solutions – Provide licensed, approved AI platforms that meet your confidentiality and compliance requirements. For example, if employees love ChatGPT then get an enterprise plan, but make sure you dig into the T’s and C’s to find out what happens to any archived content, and ask questions like:

- Which data is stored in-region and which might be processed elsewhere?

- How long is it retained or archived?

- What happens to user accounts when personnel leave?

- Does any chat history or output remain outside your organisation’s control?

If you don’t get satisfactory answers, then look at Copilot. By comparison, it offers more control over your data, and once you’ve got the prompts cracked, it’ll give you more opportunities for leveraging its integrations with the Microsoft 365 stack – including Word and Microsoft Places.

4: Avoid the Scary Costs Associated with AI

Could inbuilt AI be leading to additional monthly costs or points subtracted from a subscription that you weren’t banking on?

Check out all the AI tools you use – especially those that are built into line-of-business applications. For example, our HubSpot CRM subscription has a monthly quota of ‘AI points’, which are chargeable if my team goes over them.

Review these things:

- What’s included in your subscription plan?

- Can you ‘cap’ things so that you don’t go over your quota and start getting unexpected costs?

- What happens if you go over your subscription plan?
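To make those questions concrete, here is a minimal Python sketch that projects month-end usage from what has been used so far and prices any overage. The class and function names, the per-point price and the straight-line projection are all our own assumptions; real vendors bill in their own ways, so check your plan.

```python
from dataclasses import dataclass


@dataclass
class AiQuota:
    """Monthly AI allowance for one tool (all figures hypothetical)."""
    included_points: int     # points bundled with the subscription
    overage_price: float     # assumed cost per point beyond the allowance
    hard_cap: bool = False   # True if the tool stops at the allowance


def projected_overage_cost(quota: AiQuota, used: int, day: int,
                           days_in_month: int = 30) -> float:
    """Project usage to month end in a straight line and price the excess."""
    if day < 1:
        raise ValueError("day must be 1 or later")
    projected = used * days_in_month / day
    if quota.hard_cap:
        # With a cap in place, usage can never exceed the allowance.
        projected = min(projected, quota.included_points)
    extra = max(0.0, projected - quota.included_points)
    return round(extra * quota.overage_price, 2)
```

With 600 of 1,000 included points used by day 10 of a 30-day month, the projection is 1,800 points, so 800 would be chargeable unless a cap is in place.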

AI training can also save you LOTS of money.

For example, bad AI prompts could lead to a whole load of useless ‘results’ that will chomp through your quotas. A bit of training in prompt-writing skills will ensure you only pay for ‘valuable outcomes’.

5: Exorcise the Ghosts from Within

Before you invite AI deeper into your organisation, make sure your own house isn’t haunted… (am I taking this Halloween thang too far???)

Data Governance – If you’re using tools like Copilot internally, you’re protected from exposing your data to the outside world, but you still need to worry about over-sharing data internally.

You can help prevent this from happening by cleaning out the cobwebs and skeletons from your ‘data cupboards’:

- Start by knowing what you’ve got and identifying where sensitive or confidential information lies.

- Make sure the right people have the right access – tighten permissions and remove old sharing links that give ‘too wide’ access – also regularly review who can see what. Check out our free service to help you do this.

- Set up rules so that old data that’s no longer needed is archived or deleted automatically, so it isn’t exposed in, or ‘tainting’, your AI results.
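The clean-up steps above could be sketched in Python along these lines. This is an illustration only: `FileRecord`, `review_actions` and the three-year retention period are our own assumptions, and in practice you would use the retention and sharing reports built into your own platform rather than a hand-made list.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class FileRecord:
    """A simplified view of one document (hypothetical structure)."""
    path: str
    last_modified: date
    shared_with_everyone: bool  # e.g. an old 'anyone with the link' share


def review_actions(files, today, retention_days=365 * 3):
    """Map each file needing attention to the list of suggested actions."""
    cutoff = today - timedelta(days=retention_days)
    actions = {}
    for f in files:
        todo = []
        if f.last_modified < cutoff:
            todo.append("archive")          # older than the retention period
        if f.shared_with_everyone:
            todo.append("tighten sharing")  # access is 'too wide'
        if todo:
            actions[f.path] = todo
    return actions
```

Files that are both recent and tightly shared are left out of the result, so the output is a to-do list rather than a full inventory.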

You can read more around these areas in points 7 and 8 of this article.

Activity Monitoring – While you can’t block everything, there are some proactive things your IT team can do. For example, if a laptop is managed under your company’s Microsoft Defender for Endpoint setup, it automatically sends activity data to the cloud so you can see which apps are being used, even when working from home.

For personal or unmanaged devices, you can apply Microsoft Entra Conditional Access app control rules that monitor or restrict what people can do in web sessions – for example, stopping them from uploading files or copying text to unapproved AI tools like ChatGPT or Sora.
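The thinking behind rules like these can be shown with a small Python sketch. To be clear, this is not how Microsoft’s tools are configured; it is a conceptual illustration, and the allowlist, host name and decision labels are all made up for the example.

```python
from urllib.parse import urlparse

# Hypothetical allowlist: replace with the AI tools your company has approved.
APPROVED_AI_HOSTS = {"approved-ai.example.com"}


def session_decision(url: str, device_managed: bool) -> str:
    """Decide how to treat a web session with an AI tool.

    Assumes the URL has already been identified as an AI tool.
    Returns 'allow', 'monitor' or 'block_upload'.
    """
    host = (urlparse(url).hostname or "").lower()
    if host in APPROVED_AI_HOSTS:
        # Approved tool: trust managed devices, watch unmanaged ones.
        return "allow" if device_managed else "monitor"
    # Anything not on the allowlist cannot receive uploads or pasted text.
    return "block_upload"
```

The design choice here is ‘deny by default’: a tool has to be explicitly approved before anyone can upload to it, which is safer than trying to keep a list of every AI site to block.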

Conclusion

AI can be scary but with a clear policy, proper training, good data governance and enterprise-grade tools, you can reduce the chance of accidental data exposure, loss of reputation and uncontrolled expenditure (let’s stop with the downsides already) whilst still extracting the goodness in AI for your business.

Deliver & track your AI learning, understanding and policy compliance in Microsoft 365

This is just one of the solutions we can offer to enterprises to ‘tame’ the AI beast…